Introduction

One fine early morning around 5 AM (just what every on-call engineer dreads), we got an alert that one of our core services (let’s call it P1) was experiencing high memory utilization.

The memory utilization alert is triggered when usage exceeds 75% for more than 5 continuous minutes. This was the first time we'd received this alert; our memory utilization normally sits between 50% and 60%.
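To make the alert rule concrete, here is a minimal Python sketch of a sustained-threshold check. It is not our monitoring system's implementation. It assumes one utilization sample per minute, and the function name `sustained_breach` is hypothetical.

```python
from typing import Iterable


def sustained_breach(samples: Iterable[float], threshold: float = 75.0,
                     window_minutes: int = 5) -> bool:
    """Return True once utilization stays above `threshold` for `window_minutes`.

    Assumes `samples` holds one memory-utilization percentage per minute,
    in chronological order (a hypothetical model of the alert rule).
    """
    streak = 0  # consecutive minutes above the threshold so far
    for utilization in samples:
        # Any sample at or below the threshold resets the streak.
        streak = streak + 1 if utilization > threshold else 0
        if streak >= window_minutes:
            return True
    return False


print(sustained_breach([52, 55, 58, 60, 57, 54]))      # False: a normal day
print(sustained_breach([70, 76, 78, 80, 83, 85, 88]))  # True: five minutes above 75%
print(sustained_breach([80, 80, 70, 80, 80, 80, 80]))  # False: the dip resets the streak
```

Requiring a streak rather than a single sample keeps short spikes (for example, a burst of garbage-collection activity) from paging anyone at 5 AM.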

While our on-call engineer was responding to the alert, our co-founder and product head, Ashwin, mentioned that his E2E tests had been running from 5:00 to 5:30 AM. (Yes, he likes tests; so much so that he writes about them on LinkedIn.)

About an hour later, we received another alert for the same threshold being breached, this time on another service (let’s call it A1). That’s when we realized these events weren’t happening in isolation and that we might be looking at a cascading effect.

As a precaution, we restarted service P1 and, since A1 had been deployed recently, rolled A1 back to its previous version.

No errors had been reported yet, so we decided to stay on standby and watch both services closely.

Persistent memory growth that wouldn’t back down

For context, we had set the maximum heap size to 75% of the total memory allocated to the pod.
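A quick bit of arithmetic shows why this matters. If the heap can never grow beyond 75% of the pod's memory, then any pod-level utilization above 75% has to come from memory outside the Java heap (thread stacks, metaspace, direct buffers, allocator overhead, and so on), assuming the utilization metric counts only the process's own memory. The sketch below computes that lower bound; the 4096 MiB pod size is a made-up example and the helper name is hypothetical. (A 75% heap is commonly expressed with the JVM flag `-XX:MaxRAMPercentage=75.0`; we are assuming that here rather than quoting our actual config.)

```python
def min_off_heap_mib(pod_limit_mib: int, used_pct: int, heap_pct: int = 75) -> int:
    """Lower bound (MiB) on memory used outside the Java heap.

    The heap can never exceed heap_pct of the pod limit, so any utilization
    above that must be non-heap memory. Integer arithmetic, rounded down.
    """
    max_heap = pod_limit_mib * heap_pct // 100
    used = pod_limit_mib * used_pct // 100
    return max(0, used - max_heap)


# Hypothetical 4096 MiB pod with the heap capped at 75% (3072 MiB).
print(min_off_heap_mib(4096, 60))  # 0: usage at this level could fit entirely in the heap
print(min_off_heap_mib(4096, 90))  # 614
print(min_off_heap_mib(4096, 95))  # 819
```

In other words, at 90% or 95% utilization, hundreds of MiB are guaranteed to live off-heap, which a heap dump alone cannot show.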

We got together to debug the issue and asked for the E2E tests to be kept running. Because the pod had been restarted, memory utilization dropped at first, but a few hours later we received the same alert.

The memory utilization of service P1 kept growing until it reached a staggering 90% (a new high score), yet there were still no errors in the services. We decided to raise the alerting threshold to 95% and observe a little longer.

The following day (this time at a reasonable hour) the 95% limit was also breached.

We hadn’t changed any code recently; the only change we had made was increasing the pods’ CPU allocation to address a cold-start problem.

Coming back to the problem: we restarted the service to avoid running into Out-of-Memory (OOM) errors, which averted the problem for the next few hours. The on-call engineer was also instructed to restart the service if the alert fired again.

Dump that heap memory

As a first step in debugging, we wanted to see what was happening with the heap memory. Our first check was to see if there were any leaks.

However, the heap dump was inconclusive. We analyzed it with VisualVM, and the heap was all clear.

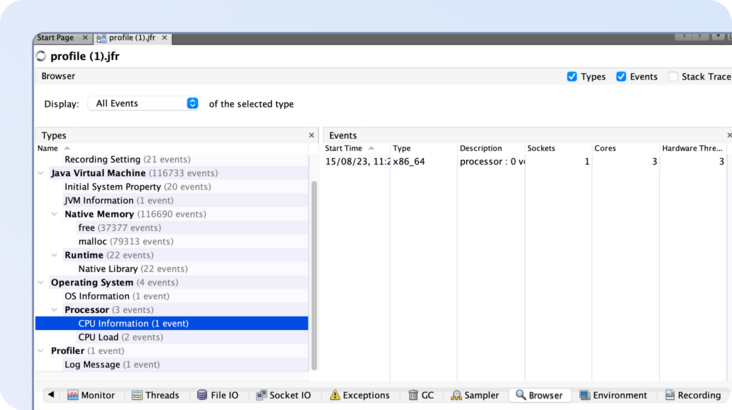

As the next step, we decided to enable JFR (Java Flight Recorder) and NMT (Native Memory Tracking) to dig further. We had also read articles[1] and notes online that pointed to jemalloc for detecting memory leaks, so, since we still couldn’t pinpoint the issue, we tried jemalloc as well.

JFR & NMT: Unravelling memory mysteries

We moved our debugging efforts to our staging/testing environment. We suspected that the memory issue appears when a large number of requests hit the service (as the E2E tests suggested). Services P1 and A1 are the most heavily used services in our system, and we didn’t want to wait long to reproduce the issue.

To reproduce the issue quickly, we ran a constant load test against our staging environment using Locust, a Python-based load testing tool.

Memory utilization kept climbing while the load test ran, so the issue was easy to reproduce. We then enabled JFR and NMT and reverted our only change: the increase in the pods’ CPU allocation to 3 cores. Back on 1 core, the NMT data looked normal, with no glaring evidence of a leak. When we raised the allocation back to 3 cores, memory consumption was higher than it had been on 1 core.
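For reference, enabling both tools on a HotSpot JVM comes down to a couple of launch flags, plus `jcmd` for reading NMT data from a running process. The Python helpers below only assemble those commands; `app.jar`, the recording file name, and the helper names are hypothetical, and in practice you would usually put the flags in the container's JVM options instead.

```python
from typing import List


def jvm_debug_command(jar: str, recording: str = "recording.jfr") -> List[str]:
    """Build a `java` command line with NMT and JFR enabled (HotSpot flags)."""
    return [
        "java",
        # Track the JVM's own native allocations; this adds some overhead.
        "-XX:NativeMemoryTracking=summary",
        # Start a JFR recording at launch and write it to `recording` on exit.
        f"-XX:StartFlightRecording=dumponexit=true,filename={recording},settings=profile",
        "-jar",
        jar,
    ]


def nmt_command(pid: int, action: str = "summary") -> List[str]:
    """Build a jcmd call; run "baseline" first, then "summary.diff" to see growth."""
    return ["jcmd", str(pid), "VM.native_memory", action]


print(" ".join(jvm_debug_command("app.jar")))
print(" ".join(nmt_command(1234, "summary.diff")))
# e.g. subprocess.run(nmt_command(pid, "baseline"), check=True)
```

As far as we understand it, NMT only sees allocations the JVM makes through its own code paths and reports requested sizes, so overhead inside the C allocator itself would not show up there.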

Upon reviewing the JFR data, we identified two key patterns of interest:

- Many threads were being created, and they were all alive

- The number of malloc call events was more than twice the number of free call events. (This doesn’t necessarily indicate a problem, but it was worth noting.)

Threading lightly to reduce the threads

We found that the number of worker threads in our Armeria HTTP server was set to over 200. (Why was the number so high? Well, we will talk about it in another blog soon enough; we’re not going to waste a chance to make another post by covering it here.)

It seemed reasonable to assume that the high thread count was causing the high memory usage, so we reduced the number of worker threads to max(10, number_of_processors * 2).
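Here is the formula as a tiny Python function (the name is hypothetical). Note that with either 1 or 3 cores it evaluates to 10, so in both CPU configurations we tried, the floor of 10 is what applied.

```python
import os
from typing import Optional


def worker_threads(num_processors: Optional[int] = None) -> int:
    """max(10, number_of_processors * 2): never fewer than 10 worker threads."""
    if num_processors is None:
        # Fall back to the CPU count Python reports for this machine.
        num_processors = os.cpu_count() or 1
    return max(10, num_processors * 2)


for cores in (1, 3, 8):
    print(cores, "->", worker_threads(cores))  # 1 -> 10, 3 -> 10, 8 -> 16
```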

Why did we choose the number 10?

Because 10 is the magic number: the perfect balance between performance and resource utilization. Many things are 10 at Xflow, such as the database pool size limit and the list API page size limit. Our product is a solid 10/10 too.

We reduced the thread count and reran the load tests, but the issue persisted even with the thread count set to the almost philosophical number 10.

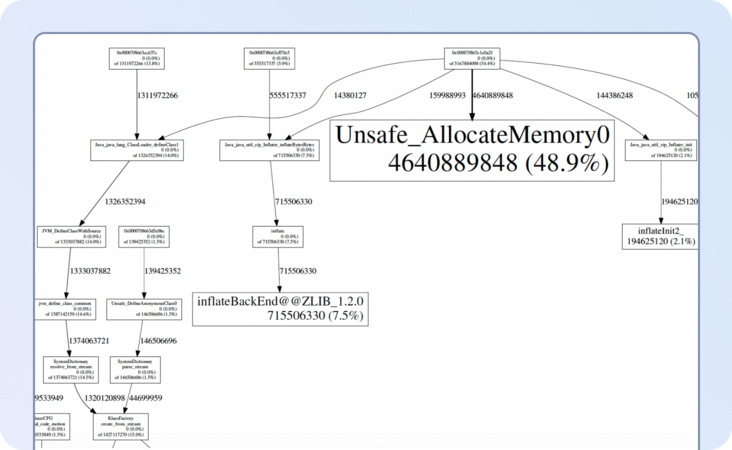

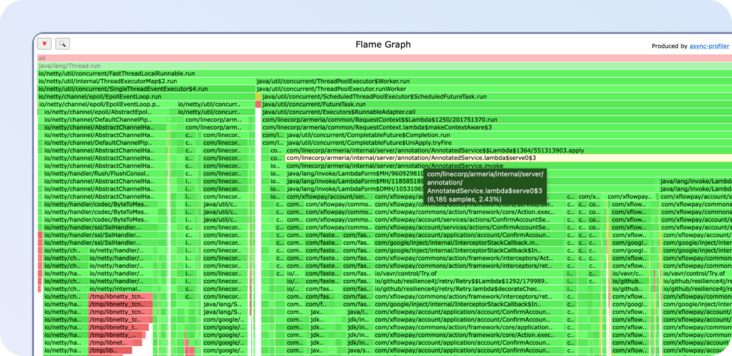

The pods’ memory usage kept increasing until they hit OOM and died. Alongside this change, we also enabled the jemalloc profiler and async-profiler (to produce a flame graph).

The culprit

So far, we weren’t able to pinpoint the root cause of the issue.

While making the debugging changes mentioned above, we also set the io.netty.leakDetectionLevel=paranoid option to check whether Netty was leaking memory.

We suspected Netty because both the jemalloc profiler graphs and async-profiler showed Netty calling malloc the most. However, Netty never logged anything indicating a memory leak.

But Netty was innocent.

We then stumbled upon arenas in memory allocators.

An arena is a region of memory that the allocator hands out allocations from. Using multiple arenas improves performance in multi-threaded applications by reducing lock contention and increasing parallelism.

We found out that on 64-bit systems, glibc’s default limit on the number of arenas is 8 * number_of_cores.

So the arena limit went from 8 (8 * 1 core) to 24 (8 * 3 cores), quite the increase.

We then discovered MALLOC_ARENA_MAX, an environment variable that limits the number of arenas glibc will create. We set MALLOC_ARENA_MAX to 4 and reran the load test.

Lo and behold, the memory growth slowed dramatically. With an even lower value of 2, it slowed down even more.

By limiting the number of arenas, we imposed cosmic order upon the chaotic realm of memory allocation. A small act, a profound impact.
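The arena arithmetic above can be sketched in Python. This models glibc's defaults as we understand them (8 arenas per core on 64-bit, 2 on 32-bit, overridden by a positive MALLOC_ARENA_MAX); it is a simplification, not glibc's code, and the function name is hypothetical.

```python
from typing import Mapping, Optional


def glibc_arena_limit(cores: int, env: Optional[Mapping[str, str]] = None,
                      is_64bit: bool = True) -> int:
    """Maximum number of malloc arenas, modeled on glibc's defaults.

    `cores` is the CPU count glibc detects. Arenas are created lazily under
    contention, so this is a ceiling, not the number that always exists.
    """
    env = env or {}
    override = env.get("MALLOC_ARENA_MAX")
    if override is not None and int(override) > 0:
        # An explicit positive MALLOC_ARENA_MAX replaces the core-based default.
        return int(override)
    # Default: 8 arenas per core on 64-bit systems, 2 per core on 32-bit.
    return cores * (8 if is_64bit else 2)


print(glibc_arena_limit(1))                             # 8
print(glibc_arena_limit(3))                             # 24
print(glibc_arena_limit(3, {"MALLOC_ARENA_MAX": "4"}))  # 4
print(glibc_arena_limit(3, {"MALLOC_ARENA_MAX": "2"}))  # 2
```

Because the variable is read when the allocator initializes, it has to be in the JVM process's environment at startup (for example, in the pod spec); setting it later has no effect.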

Arena wars: The rise of glibc and the malloc menace

At the heart of our memory issue lies glibc’s malloc implementation, which uses arenas to manage memory allocation in multithreaded applications. Here’s how it works and why things went awry:

- Arenas and parallelism: Arenas are separate memory regions that glibc’s malloc uses to minimize contention in multithreaded environments. Each arena handles allocations independently, improving performance by reducing locking overhead. By default, the maximum number of arenas scales with the CPU cores (8 * number of cores on 64-bit systems), as mentioned earlier.

- Memory retention: When memory is freed, it isn’t returned to the operating system immediately. Instead, it remains within the arena for future allocations. This design choice optimizes speed but at the cost of memory overhead.

- Fragmentation woes: Freed memory within an arena can become fragmented, creating small unusable gaps. As allocations and deallocations pile up, arenas hold onto more memory than they use, which isn’t reclaimed.

- Netty’s role: Netty, heavily used in our HTTP server, calls malloc frequently to allocate memory buffers. This caused extensive fragmentation across multiple arenas. Combined with our increased CPU allocation (and hence more arenas), memory was spread thinly across arenas, inflating usage further.
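To see why more arenas can mean more retained memory, here is a deliberately simplified toy model in Python. It is not glibc's actual allocation policy: it assumes each thread sticks to one arena, that bursts served by the same arena never overlap, and that freed memory is never returned to the OS, so each arena keeps the largest burst it ever served. The function name and the 200 × 10 MiB workload are made up for illustration.

```python
from typing import List


def retained_mib(bursts_mib: List[int], arena_max: int) -> int:
    """Toy model: memory an allocator still holds after every burst is freed.

    Simplifying assumptions (NOT glibc's actual policy):
    - thread i always allocates from arena i % arena_max;
    - bursts served by the same arena never overlap in time;
    - freed memory stays cached in its arena and is never returned to the OS,
      so each arena retains the largest burst it has ever served.
    Fragmentation is ignored; it would add to the retained total.
    """
    peaks = [0] * arena_max
    for thread_id, burst in enumerate(bursts_mib):
        arena = thread_id % arena_max
        peaks[arena] = max(peaks[arena], burst)
    return sum(peaks)


bursts = [10] * 200  # e.g. 200 threads, each with a 10 MiB burst
for arenas in (24, 8, 4, 2):
    print(arenas, "arenas ->", retained_mib(bursts, arenas), "MiB retained")
# 24 -> 240, 8 -> 80, 4 -> 40, 2 -> 20
```

Even this crude model reproduces the trend we saw: retained memory scales with the number of arenas in use, and lowering the cap from 4 to 2 shrinks it further.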

In conclusion, the persistent memory growth and eventual OOM kills were a consequence of glibc’s arena-based allocation, fragmented memory, and Netty’s allocation pattern. By tweaking the number of arenas (MALLOC_ARENA_MAX), we found a way to control memory usage.

Jemalloc: Saving our memory, one arena at a time

Even though we were able to control the memory growth in glibc by limiting the number of arenas, we decided to replace glibc’s malloc with jemalloc. The decision was driven by jemalloc’s superior memory management capabilities, which align better with our needs for efficient memory usage, proactive memory reclamation, and detailed diagnostics. Here’s why jemalloc was the ideal choice for our setup:

- Better fragmentation handling: Jemalloc groups allocations into size classes and puts per-thread caches in front of its arenas, which generally keeps fragmentation lower than glibc’s malloc under heavily multithreaded load. This leads to more efficient memory allocation and less wasted memory, ensuring smoother performance during high-load conditions.

- Proactive memory reclamation: Unlike glibc, which tends to hold on to freed memory for future reuse, jemalloc purges unused pages back to the operating system over time (tunable via options such as dirty_decay_ms). This helps maintain a stable memory footprint, preventing memory bloat and reducing the likelihood of Out-of-Memory (OOM) conditions.

- Detailed diagnostics: Jemalloc provides profiling and debugging tools such as heap profiling, which make it easier to detect and resolve memory leaks or unusual allocation patterns. This is a significant advantage over glibc’s malloc, whose built-in statistics (e.g. malloc_stats and malloc_info) are much coarser, making memory-related issues harder to identify and fix.

- Netty compatibility: Netty frequently allocates and frees small memory buffers, and jemalloc’s size-class-based design handles this pattern efficiently, reducing overhead in memory-intensive scenarios.
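One common way to swap the allocator on Linux, without rebuilding anything, is to preload the jemalloc shared library into the JVM process with LD_PRELOAD and pass runtime options through MALLOC_CONF. The Python sketch below builds such an environment. The library path (where Debian and Ubuntu's libjemalloc2 package typically puts it on x86_64), the option values, and the helper name are assumptions to adapt, not our production settings.

```python
import os
from typing import Dict, Mapping, Optional

# Assumed location of jemalloc in a Debian/Ubuntu x86_64 image (libjemalloc2 package).
JEMALLOC_PATH = "/usr/lib/x86_64-linux-gnu/libjemalloc.so.2"


def jemalloc_env(base: Optional[Mapping[str, str]] = None,
                 lib_path: str = JEMALLOC_PATH,
                 malloc_conf: str = "background_thread:true,dirty_decay_ms:5000") -> Dict[str, str]:
    """Return a copy of `base` (default: os.environ) that preloads jemalloc."""
    env = dict(os.environ if base is None else base)
    # LD_PRELOAD makes the dynamic linker resolve malloc/free to jemalloc first.
    existing = env.get("LD_PRELOAD")
    env["LD_PRELOAD"] = f"{lib_path}:{existing}" if existing else lib_path
    # jemalloc reads runtime options from MALLOC_CONF at startup; these values
    # (background purging thread, 5 s dirty-page decay) are illustrative.
    env["MALLOC_CONF"] = malloc_conf
    return env


env = jemalloc_env(base={"PATH": "/usr/bin"})
print(env["LD_PRELOAD"], env["MALLOC_CONF"])
# Then launch the service with it, e.g.:
# subprocess.run(["java", "-jar", "app.jar"], env=env)
```

In a container, the same two variables would normally be set directly in the image or pod spec. Heap profiling additionally needs a jemalloc build with profiling enabled and an option such as `prof:true` in MALLOC_CONF.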

By choosing jemalloc, we addressed key challenges presented by glibc in managing memory efficiently in a multithreaded environment, leading to a more stable and performant application on Kubernetes.

Learnings

- Configuration changes often have cascading effects. Always test with real workloads.

- Choose memory allocators that suit your workload to avoid surprises under pressure.

- Invest in retrospective-friendly monitoring to learn from incidents.

PS: There’s more. Please watch this space for Part 2 of this debugging story.

Notes:
